Terraforming a Laravel application using AWS ECS Fargate – Part 5

Elastic Container Service

We have reached the final part of this series. In this post we will be configuring the ECS cluster.

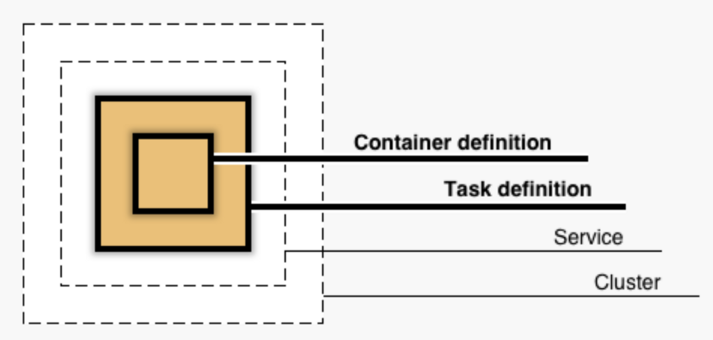

ECS consists of four nested layers. The innermost layer is the container definition. Containers run as tasks, which require a task definition. A service keeps the desired number of tasks running, and services run inside an ECS cluster.

Create the file ecs.tf in the Terraform repo and define the cluster:

resource "aws_ecs_cluster" "ecs_cluster" {

name = "${var.app_name}-${var.app_env}-cluster"

tags = {

Name = "${var.app_name}-ecs"

Environment = var.app_env

}

}

Web application

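Add the environment variables required by the Laravel application, the queue worker role and the scheduler role. The env_vars list holds the Laravel application settings. Note that values set this way, including APP_KEY and DB_PASSWORD, are stored in plain text in the task definition. For a real deployment, please use a more secure store such as AWS Secrets Manager or SSM Parameter Store.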

locals {

env_vars = [

{

"name" : "APP_ENV",

"value" : "production"

},

{

"name" : "APP_KEY",

"value" : "base64:jiuup8dpsFJDH/VW1xwJGzvcdY2eVNhg358fuB4JFgA="

},

{

"name" : "APP_NAME",

"value" : "LiteBreeze Laravel ECS example"

},

{

"name" : "APP_DEBUG",

"value" : "false"

},

{

"name" : "APP_URL",

"value" : "${aws_alb.ecs_alb.dns_name}"

},

{

"name" : "BROADCAST_DRIVER",

"value" : "log"

},

{

"name" : "CACHE_DRIVER",

"value" : "redis"

},

{

"name" : "FILESYSTEM_DISK",

"value" : "s3"

},

{

"name" : "QUEUE_CONNECTION",

"value" : "redis"

},

{

"name" : "SESSION_DRIVER",

"value" : "redis"

},

{

"name" : "SESSION_LIFETIME",

"value" : "120"

},

{

"name" : "LOG_CHANNEL",

"value" : "stderr"

},

{

"name" : "LOG_LEVEL",

"value" : "error"

},

{

"name" : "AWS_DEFAULT_REGION",

"value" : "${var.aws_region}"

},

{

"name" : "AWS_BUCKET",

"value" : "${aws_s3_bucket.ecs_s3.bucket}"

},

{

"name" : "REDIS_CLUSTER_ENABLED",

"value" : "true"

},

{

"name" : "REDIS_HOST",

"value" : "${aws_elasticache_replication_group.ecs_cache_replication_group.primary_endpoint_address}"

},

{

"name" : "REDIS_CLIENT",

"value" : "phpredis"

},

{

"name" : "DB_CONNECTION",

"value" : "mysql"

},

{

"name" : "DB_HOST",

"value" : "${aws_db_instance.ecs_rds.address}"

},

{

"name" : "DB_PORT",

"value" : "3306"

},

{

"name" : "DB_DATABASE",

"value" : "${aws_db_instance.ecs_rds.db_name}"

},

{

"name" : "DB_USERNAME",

"value" : "${aws_db_instance.ecs_rds.username}"

},

{

"name" : "DB_PASSWORD",

"value" : "${random_password.db_generated_password.result}"

}

]

container_worker_role = [

{

"name" : "CONTAINER_ROLE",

"value" : "worker"

}

]

container_scheduler_role = [

{

"name" : "CONTAINER_ROLE",

"value" : "scheduler"

}

]

}
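As a rough illustration of a more secure approach, the sketch below moves APP_KEY into SSM Parameter Store and passes it to the container through the ECS secrets mechanism. The variable app_key, the resource name aws_ssm_parameter.app_key, the parameter path and the local secret_vars are assumptions made for this example and are not part of the original repository.

```hcl
# Hypothetical input variable; supply the value outside of version control.
variable "app_key" {
  type      = string
  sensitive = true
}

# Store the key encrypted as a SecureString parameter.
resource "aws_ssm_parameter" "app_key" {
  name  = "/${var.app_name}/${var.app_env}/APP_KEY"
  type  = "SecureString"
  value = var.app_key
}

locals {
  # Entries for the "secrets" key of a container definition.
  # ECS resolves valueFrom when the task starts.
  secret_vars = [
    {
      name      = "APP_KEY"
      valueFrom = aws_ssm_parameter.app_key.arn
    }
  ]
}

# In the container definition, next to "environment":
#   "secrets": ${jsonencode(local.secret_vars)},
```

With this approach the APP_KEY entry would be removed from env_vars, and the task execution role would need permission to read the parameter (ssm:GetParameters, plus kms:Decrypt if a customer managed KMS key is used).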

Next, we create the web server task definition. The NGINX and PHP-FPM containers are configured as sidecars in the container_definitions block. Note the volumesFrom and volumes entries, which make the code volume of the PHP container available in the NGINX container. I have used a small task size with 1 GiB of RAM and 0.5 vCPU, split between NGINX (256 MiB, 0.25 vCPU) and PHP-FPM (768 MiB, 0.25 vCPU). Choose a task size that suits your application's workload:

resource "aws_ecs_task_definition" "ecs_task_webserver" {

container_definitions = <<DEFINITION

[

{

"name": "nginx",

"image": "chynkm/nginx-ecs:latest",

"essential": true,

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "${aws_cloudwatch_log_group.ecs_webserver_logs.id}",

"awslogs-region": "${var.aws_region}",

"awslogs-stream-prefix": "${var.app_name}-${var.app_env}-nginx"

}

},

"portMappings": [{

"containerPort": 80,

"hostPort": 80

}],

"dependsOn": [{

"containerName": "php",

"condition": "HEALTHY"

}],

"volumesFrom": [{

"sourceContainer": "php",

"readOnly": true

}],

"healthCheck": {

"command": [

"CMD-SHELL",

"curl -f http://localhost/health_check || exit 1"

],

"interval": 5,

"timeout": 2,

"retries": 3

},

"memory": 256,

"cpu": 256

},

{

"name": "php",

"image": "chynkm/laravel-ecs:latest",

"essential": true,

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "${aws_cloudwatch_log_group.ecs_webserver_logs.id}",

"awslogs-region": "${var.aws_region}",

"awslogs-stream-prefix": "${var.app_name}-${var.app_env}-php"

}

},

"environment": ${jsonencode(local.env_vars)},

"portMappings": [{

"containerPort": 9000

}],

"volumes": [{

"name": "webroot"

}],

"healthCheck": {

"command": [

"CMD-SHELL",

"nc -z -v 127.0.0.1 9000 || exit 1"

],

"interval": 5,

"timeout": 2,

"retries": 3

},

"memory": 768,

"cpu": 256

}

]

DEFINITION

family = "${var.app_name}-${var.app_env}-webserver-task"

requires_compatibilities = ["FARGATE"]

network_mode = "awsvpc"

memory = "1024"

cpu = "512"

execution_role_arn = aws_iam_role.ecs_tasks_execution_role.arn

task_role_arn = aws_iam_role.ecs_tasks_execution_role.arn

tags = {

Name = "${var.app_name}-ecs-webserver-task"

Environment = var.app_env

}

}
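The heredoc above works, but a JSON typo in it only surfaces at plan time. One possible alternative, shown here as a sketch rather than the approach used in the repository, is to describe each container as an HCL object and let jsonencode produce the JSON. The local name nginx_container is an assumption for this example, and the health check is omitted for brevity.

```hcl
locals {
  # NGINX sidecar expressed as an HCL object instead of raw JSON.
  nginx_container = {
    name      = "nginx"
    image     = "chynkm/nginx-ecs:latest"
    essential = true
    cpu       = 256
    memory    = 256

    portMappings = [{ containerPort = 80, hostPort = 80 }]

    # Start NGINX only after the PHP-FPM container reports healthy.
    dependsOn = [{ containerName = "php", condition = "HEALTHY" }]

    # Mount the volumes exposed by the PHP container, read-only.
    volumesFrom = [{ sourceContainer = "php", readOnly = true }]

    logConfiguration = {
      logDriver = "awslogs"
      options = {
        "awslogs-group"         = aws_cloudwatch_log_group.ecs_webserver_logs.id
        "awslogs-region"        = var.aws_region
        "awslogs-stream-prefix" = "${var.app_name}-${var.app_env}-nginx"
      }
    }
  }
}
```

The task definition would then set container_definitions = jsonencode([local.nginx_container, local.php_container]), where local.php_container is a hypothetical object built the same way for the PHP-FPM container.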

The next step is to configure the service that runs the task definition. We run the service in the public subnets and attach it to the ALB target group.

resource "aws_ecs_service" "ecs_service_webserver" {

name = "${var.app_name}-${var.app_env}-ecs-webserver-service"

cluster = aws_ecs_cluster.ecs_cluster.id

task_definition = aws_ecs_task_definition.ecs_task_webserver.arn

launch_type = "FARGATE"

desired_count = 1

enable_execute_command = true

network_configuration {

subnets = aws_subnet.ecs_public.*.id

assign_public_ip = true

security_groups = [aws_security_group.ecs_tasks.id]

}

load_balancer {

target_group_arn = aws_lb_target_group.ecs_alb_tg.arn

container_name = "nginx"

container_port = 80

}

depends_on = [aws_lb_listener.ecs_alb_http_listener]

tags = {

Name = "${var.app_name}-ecs-webserver-service"

Environment = var.app_env

}

}

Auto scaling containers

Next, we configure the scale-up and scale-down policies for the service. Adjust max_capacity and the number of tasks added or removed per step to suit your needs. Here, each scaling action adds or removes 1 task.

resource "aws_appautoscaling_target" "ecs_target" {

max_capacity = 10

min_capacity = 1

resource_id = "service/${aws_ecs_cluster.ecs_cluster.name}/${aws_ecs_service.ecs_service_webserver.name}"

scalable_dimension = "ecs:service:DesiredCount"

service_namespace = "ecs"

}

resource "aws_appautoscaling_policy" "ecs_policy_scale_up" {

name = "${var.app_name}-${var.app_env}-scale-up"

policy_type = "StepScaling"

resource_id = aws_appautoscaling_target.ecs_target.resource_id

scalable_dimension = aws_appautoscaling_target.ecs_target.scalable_dimension

service_namespace = aws_appautoscaling_target.ecs_target.service_namespace

step_scaling_policy_configuration {

adjustment_type = "ChangeInCapacity"

cooldown = 60

metric_aggregation_type = "Maximum"

step_adjustment {

metric_interval_lower_bound = 0

scaling_adjustment = 1

}

}

}

resource "aws_appautoscaling_policy" "ecs_policy_scale_down" {

name = "${var.app_name}-${var.app_env}-scale-down"

policy_type = "StepScaling"

resource_id = aws_appautoscaling_target.ecs_target.resource_id

scalable_dimension = aws_appautoscaling_target.ecs_target.scalable_dimension

service_namespace = aws_appautoscaling_target.ecs_target.service_namespace

step_scaling_policy_configuration {

adjustment_type = "ChangeInCapacity"

cooldown = 60

metric_aggregation_type = "Maximum"

step_adjustment {

metric_interval_upper_bound = 0

scaling_adjustment = -1

}

}

}
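Once auto scaling changes the number of running tasks, the desired_count = 1 set on the web server service no longer matches reality, and a later terraform apply would reset the service to a single task. A common way to avoid this, sketched below as an optional addition to the existing service resource, is to ignore changes to desired_count.

```hcl
resource "aws_ecs_service" "ecs_service_webserver" {
  # ... existing arguments from the service definition stay unchanged ...

  # Let Application Auto Scaling own the task count after creation.
  lifecycle {
    ignore_changes = [desired_count]
  }
}
```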

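We will use the CPU metric to scale the ECS tasks up and down. To do so, we create two CloudWatch alarms for the webserver service: one triggers the scale-up policy when average CPU utilization is at or above 60% for two consecutive 60-second periods, and the other triggers the scale-down policy when it is at or below 10%.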

resource "aws_cloudwatch_metric_alarm" "ecs_policy_cpu_high" {

alarm_name = "${var.app_name}-${var.app_env}-cpu-scale-up"

comparison_operator = "GreaterThanOrEqualToThreshold"

evaluation_periods = "2"

metric_name = "CPUUtilization"

namespace = "AWS/ECS"

period = "60"

statistic = "Average"

threshold = "60"

dimensions = {

ClusterName = aws_ecs_cluster.ecs_cluster.name

ServiceName = aws_ecs_service.ecs_service_webserver.name

}

alarm_description = "This metric monitors ECS ${var.app_name} CPU high utilization"

alarm_actions = [aws_appautoscaling_policy.ecs_policy_scale_up.arn]

}

resource "aws_cloudwatch_metric_alarm" "ecs_policy_cpu_low" {

alarm_name = "${var.app_name}-${var.app_env}-cpu-scale-down"

comparison_operator = "LessThanOrEqualToThreshold"

evaluation_periods = "2"

metric_name = "CPUUtilization"

namespace = "AWS/ECS"

period = "60"

statistic = "Average"

threshold = "10"

dimensions = {

ClusterName = aws_ecs_cluster.ecs_cluster.name

ServiceName = aws_ecs_service.ecs_service_webserver.name

}

alarm_description = "This metric monitors ECS ${var.app_name} CPU low utilization"

alarm_actions = [aws_appautoscaling_policy.ecs_policy_scale_down.arn]

}
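For comparison, the same goal could probably be reached with a single target tracking policy instead of two step scaling policies and two alarms. This is an alternative technique, not the setup used in this series. The resource name ecs_policy_cpu_target and the 60% target are assumptions chosen to mirror the scale-up threshold above.

```hcl
resource "aws_appautoscaling_policy" "ecs_policy_cpu_target" {
  name               = "${var.app_name}-${var.app_env}-cpu-target"
  policy_type        = "TargetTrackingScaling"
  resource_id        = aws_appautoscaling_target.ecs_target.resource_id
  scalable_dimension = aws_appautoscaling_target.ecs_target.scalable_dimension
  service_namespace  = aws_appautoscaling_target.ecs_target.service_namespace

  target_tracking_scaling_policy_configuration {
    # Built-in metric: average CPU utilization across the service's tasks.
    predefined_metric_specification {
      predefined_metric_type = "ECSServiceAverageCPUUtilization"
    }

    target_value       = 60 # keep average CPU around 60%
    scale_in_cooldown  = 60 # seconds to wait after removing tasks
    scale_out_cooldown = 60 # seconds to wait after adding tasks
  }
}
```

With target tracking, Application Auto Scaling creates and manages the CloudWatch alarms itself, so the two alarm resources above would not be needed. The step scaling setup gives finer control over the thresholds and the size of each adjustment.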

Queue workers

The next step is to configure the queue workers. We start by writing an ECS task definition for the queue workers. Note that we use the same PHP image that runs the web server, but add an extra environment variable, CONTAINER_ROLE, which switches the container to run a queue worker. Also note that I have used a smaller task size (512 MiB of RAM and 0.25 vCPU).

resource "aws_ecs_task_definition" "ecs_task_worker" {

container_definitions = <<DEFINITION

[

{

"name": "php",

"image": "chynkm/laravel-ecs:latest",

"essential": true,

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "${aws_cloudwatch_log_group.ecs_worker_logs.id}",

"awslogs-region": "${var.aws_region}",

"awslogs-stream-prefix": "${var.app_name}-${var.app_env}-php"

}

},

"environment": ${jsonencode(concat(local.env_vars, local.container_worker_role))},

"healthCheck": {

"command": [

"CMD-SHELL",

"ps aux | grep -v grep | grep -c 'queue:work' || exit 1"

],

"interval": 5,

"timeout": 2,

"retries": 3

},

"memory": 512,

"cpu": 256

}

]

DEFINITION

family = "${var.app_name}-${var.app_env}-worker-task"

requires_compatibilities = ["FARGATE"]

network_mode = "awsvpc"

memory = "512"

cpu = "256"

execution_role_arn = aws_iam_role.ecs_tasks_execution_role.arn

task_role_arn = aws_iam_role.ecs_tasks_execution_role.arn

tags = {

Name = "${var.app_name}-ecs-worker-task"

Environment = var.app_env

}

}

We configure a service to run the worker task.

resource "aws_ecs_service" "ecs_service_worker" {

name = "${var.app_name}-${var.app_env}-ecs-worker-service"

cluster = aws_ecs_cluster.ecs_cluster.id

task_definition = aws_ecs_task_definition.ecs_task_worker.arn

launch_type = "FARGATE"

desired_count = 1

enable_execute_command = true

network_configuration {

subnets = aws_subnet.ecs_public.*.id

assign_public_ip = true

security_groups = [aws_security_group.ecs_tasks.id]

}

depends_on = [aws_ecs_service.ecs_service_webserver]

tags = {

Name = "${var.app_name}-ecs-worker-service"

Environment = var.app_env

}

}

Scheduler

We will create a task definition for running the scheduler. It uses the same Laravel (PHP) image, but with an additional environment variable that makes the container run the scheduler command. I have used the same task size as the queue worker.

resource "aws_ecs_task_definition" "ecs_task_scheduler" {

container_definitions = <<DEFINITION

[

{

"name": "php",

"image": "chynkm/laravel-ecs:latest",

"essential": true,

"logConfiguration": {

"logDriver": "awslogs",

"options": {

"awslogs-group": "${aws_cloudwatch_log_group.ecs_scheduler_logs.id}",

"awslogs-region": "${var.aws_region}",

"awslogs-stream-prefix": "${var.app_name}-${var.app_env}-php"

}

},

"environment": ${jsonencode(concat(local.env_vars, local.container_scheduler_role))},

"memory": 512,

"cpu": 256

}

]

DEFINITION

family = "${var.app_name}-${var.app_env}-scheduler-task"

requires_compatibilities = ["FARGATE"]

network_mode = "awsvpc"

memory = "512"

cpu = "256"

execution_role_arn = aws_iam_role.ecs_tasks_execution_role.arn

task_role_arn = aws_iam_role.ecs_tasks_execution_role.arn

tags = {

Name = "${var.app_name}-ecs-scheduler-task"

Environment = var.app_env

}

}

Finally, we will create a service to run the scheduler task.

resource "aws_ecs_service" "ecs_service_scheduler" {

name = "${var.app_name}-${var.app_env}-ecs-scheduler-service"

cluster = aws_ecs_cluster.ecs_cluster.id

task_definition = aws_ecs_task_definition.ecs_task_scheduler.arn

launch_type = "FARGATE"

desired_count = 1

enable_execute_command = true

network_configuration {

subnets = aws_subnet.ecs_public.*.id

assign_public_ip = true

security_groups = [aws_security_group.ecs_tasks.id]

}

depends_on = [aws_ecs_service.ecs_service_webserver]

tags = {

Name = "${var.app_name}-ecs-scheduler-service"

Environment = var.app_env

}

}

Execute the following command to generate a plan for the above infrastructure.

terraform plan -out plans/ecs

The command will output:

Terraform will perform the following actions:

# aws_appautoscaling_policy.ecs_policy_scale_down will be created

# aws_appautoscaling_policy.ecs_policy_scale_up will be created

# aws_appautoscaling_target.ecs_target will be created

# aws_cloudwatch_metric_alarm.ecs_policy_cpu_high will be created

# aws_cloudwatch_metric_alarm.ecs_policy_cpu_low will be created

# aws_ecs_cluster.ecs_cluster will be created

# aws_ecs_service.ecs_service_webserver will be created

# aws_ecs_task_definition.ecs_task_webserver will be created

# aws_ecs_service.ecs_service_scheduler will be created

# aws_ecs_service.ecs_service_worker will be created

# aws_ecs_task_definition.ecs_task_scheduler will be created

# aws_ecs_task_definition.ecs_task_worker will be created

Plan: 12 to add, 0 to change, 0 to destroy.

Review the plan and apply the changes.

terraform apply plans/ecs

Apply complete! Resources: 12 added, 0 changed, 0 destroyed.

Commit and push the changes to the repository. All the code related to the above section is available in this GitHub link.

We have added PHP code that inserts data into RDS. Hence, we need to connect to the RDS instance and execute the following SQL. The master password is available in the SSM Parameter Store. I leave this task to the reader as a challenge.

CREATE TABLE `logs` ( `id` int NOT NULL AUTO_INCREMENT, `created_at` datetime NOT NULL, PRIMARY KEY (`id`) ) ENGINE=InnoDB DEFAULT CHARSET=utf8mb4 COLLATE=utf8mb4_unicode_ci;

The next step is to verify all the functionality. Navigate to the EC2 section of the AWS console and fetch the load balancer (ALB) DNS name. Open each of the following URLs in the browser:

ALB-URL/q

This will log the IP address to the log file via the worker queue. Navigate to the CloudWatch logs of the worker task to view the output.

ALB-URL/s3

This will create a new file in the S3 bucket.

ALB-URL/rds

This will create an entry in the logs table of the RDS MySQL database.

This series of blog posts demonstrates how to host a LEMP (Laravel) application on ECS Fargate.
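As an alternative to looking up the ALB DNS name in the console, it could be exposed as a Terraform output. The output name alb_dns_name is an assumption for this sketch. The referenced attribute is the same one used for APP_URL in env_vars.

```hcl
# Hypothetical output; prints the ALB DNS name after terraform apply.
output "alb_dns_name" {
  description = "Public DNS name of the application load balancer"
  value       = aws_alb.ecs_alb.dns_name
}
```

After the next apply, running terraform output alb_dns_name should print the value to use in place of ALB-URL.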

Destroying the infrastructure

Log in to the AWS console and delete the contents of the S3 bucket ${var.app_name}-${var.app_env}-assets.

Execute the following command to delete all the AWS resources:

terraform destroy --auto-approve
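The manual step of emptying the bucket could likely be avoided by setting force_destroy on the bucket resource, which lets Terraform delete the objects together with the bucket. The sketch below assumes the bucket from Part 3 is declared roughly as shown. Only the force_destroy line is new, and the rest of that resource should stay as it is.

```hcl
resource "aws_s3_bucket" "ecs_s3" {
  bucket = "${var.app_name}-${var.app_env}-assets"

  # Allow terraform destroy to remove a non-empty bucket.
  # This permanently deletes every object in it.
  force_destroy = true

  # ... any other arguments from Part 3 stay unchanged ...
}
```

The change has to be applied with terraform apply before running terraform destroy, so that the setting is recorded in the state. For a bucket holding production data, leaving force_destroy off is the safer default.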

Posts in this series

Part 1 – Getting started with Terraform

Part 2 – Creating the VPC and ALB

Part 3 – Backing services

Part 4 – Configuring the LEMP stack in Docker

Part 5 – ECS